Recently published PwC report on Responsible AI

Version 1.0.0


Introduction

A recent PwC report on ‘Responsible AI’ asked 800+ respondents several questions about the responsible adoption and use of AI in their organizations. The report offers a glimpse into how these organizations adopt and use AI and what drives them. In this blog post, I dissect and distill the report's information and present it alongside my own thoughts and comments to provide a broader viewpoint.

The PwC report highlights the growing recognition that AI is not merely a technological innovation but also carries social and ethical responsibilities. This adds complexity for organizations grappling with how to ensure AI systems are developed and deployed responsibly, taking fairness, transparency, and accountability into account. In some jurisdictions, such as the EU, this is not just a moral, social, or medical decision but also a regulatory one: in EU member states the EU AI Act is now in force, and there will be penalties down the line for non-compliance.

One of the many key takeaways from the report is the need to emphasize a human-centered approach to AI. Organizations are realizing that AI should augment human capabilities rather than replace them. This mindset shift is essential for fostering a culture of collaboration and trust between humans and AI systems.

Another critical takeaway is the increasing focus on data quality, governance, and security for AI. Organizations recognize that the quality of the data used to train AI models directly impacts their fairness and accuracy. As a result, there is a growing emphasis on establishing robust data governance frameworks to ensure data integrity and minimize bias. Organizations also recognize the need for robust governance models to safeguard against regulatory breaches, and the need for AI that is safe for their employees, clients, and customers.

The report also highlights the importance of continuous learning and improvement in AI systems. Organizations are adopting agile development practices to rapidly iterate on AI models, incorporating feedback from users and stakeholders. This iterative approach enables organizations to refine and enhance their AI systems over time, ensuring they remain aligned with changing business needs and societal expectations.

Is AI all hype and a bubble waiting to burst?

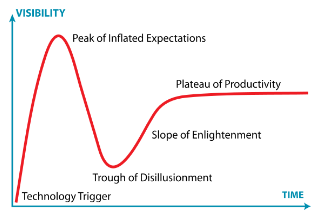

The report would suggest a definitive no. The negative press we are seeing looks more like the natural hype cycle that most technologies go through, and with AI it seems we are heading into the trough of disillusionment. This is to be expected: it is the stage where people begin to understand the limitations of a technology and realize that it is not going to fix everything. That realization leads some to conclude that the technology will fail, overlooking where its actual value lies, and this is reflected in the negative press. The good news is that once the trough of disillusionment is over, people start to apply the technology where it is of use and value. Given the current speed at which AI is moving through the hype cycle, we should begin to see this by early next year.

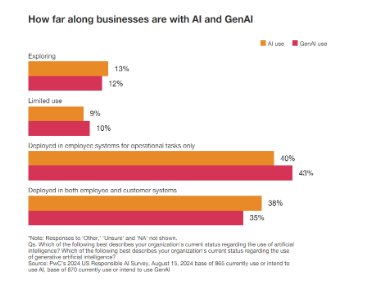

The report validates that AI is not just hype or a bubble: over 40% of the 800+ respondents have already deployed AI projects with clients and customers, and over 40% have AI deployed to some degree internally with employees. This is a high number of AI implementations, and we can certainly expect some failures among them. But it also suggests that organizations are embracing AI and are eager to explore how it can benefit them, in the same way they adopted the public cloud and reaped the benefits. The key point is that organizations are using the technology, exploring it, and finding value.

We can see from the responses to the question ‘How far along the business are with AI and GenAI’ that organizations are not sitting on the sidelines waiting to see what happens but are instead taking an adoptive approach. I expect that approach to be exploratory, with organizations working out how they can get the most benefit from AI. We will therefore see an exploration phase, an analysis phase, and an implementation phase as organizations move toward final AI implementations.

The conclusion is that organizations are embracing AI at some level, or at least plan to. This means we will start to see organizations find where AI's value lies and move to permanent, real-world usage of AI and related technologies, pushing aside the idea that AI will fail or that it is just another dot-com bubble.

Conclusion

In closing, the PwC report on "Responsible AI" reveals critical takeaways for organizations navigating the adoption of AI. First, there's a growing emphasis on AI's ethical and social responsibilities, particularly around fairness, transparency, and accountability. A human-centered approach is critical, with AI seen as a tool to augment human capabilities rather than replace them.

Data quality, governance, and security are paramount, as they directly influence the fairness and accuracy of AI systems. Continuous learning and iterative improvement are also crucial, with agile development practices helping organizations refine their AI models.

Finally, the report dispels the notion that AI is just hype. With over 40% of respondents already deploying AI, it's clear that organizations are actively exploring and implementing AI, positioning it as a key component of their future strategies. This proactive approach suggests that AI is here to stay, driving real value and innovation across industries.