Revolutions in Frontend Development with AI

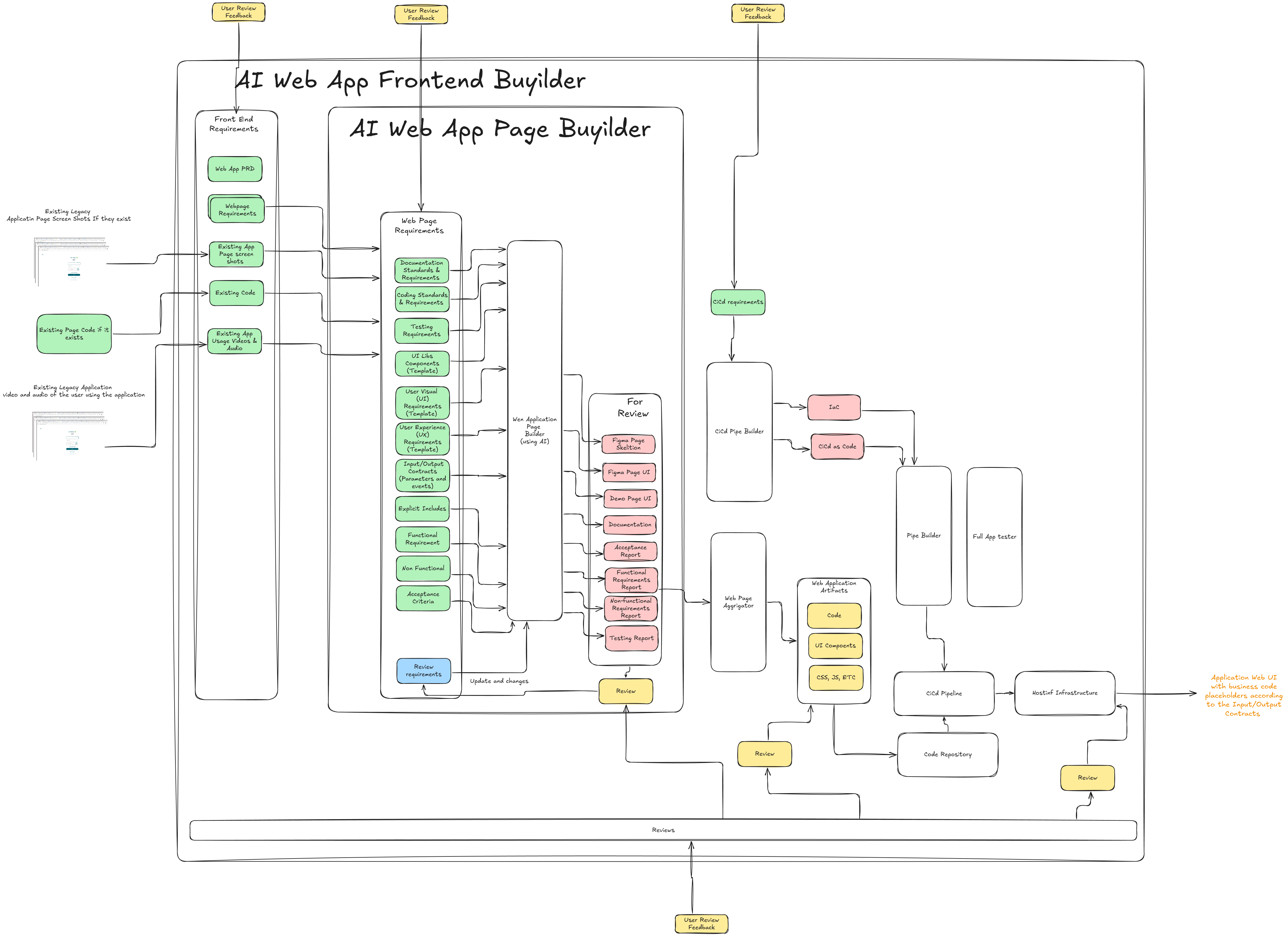

Frontend development is on the brink of a revolution, driven by artificial intelligence. What if you could provide a high-level description of an application's user interface, or video and audio recordings of someone using a legacy application from the era of ASP Classic or VB6, and have a fully functional, production-ready frontend built for you? The days of manual, line-by-line coding are fading fast. A new era is emerging in which AI doesn't just assist developers but takes the lead in generating code, running tests, and managing deployment.

This isn't just about faster development; it's about a complete shift in how we build, test, and deploy applications. By leveraging AI, we can move from a human-driven process to a streamlined, AI-driven workflow that turns ideas into working software far more quickly. In this post, we'll walk through an end-to-end process that uses AI to build frontends, from interpreting complex requirements to deploying the final application.

The What

"The What" defines the set of inputs that provide an AI with all the information it needs to understand a project's goals and build a frontend application. These inputs can be broken down as follows:

Existing Assets: For brownfield (existing) applications, this includes screenshots of app pages and the existing page code in any language. This gives the AI a visual and structural reference point.

Video and Audio: For brownfield applications, video and audio recordings capture a user working through the existing app. For greenfield (new) projects, other input sources take their place, such as the UI description from a product owner, the user experience design from a product designer, and UI component standards and practices. The AI uses these inputs to understand the user's intent and functional requirements.

Product Requirements: For both brownfield and greenfield projects, the product owner must define the product requirements and user flows in a Product Design Document (PDD). The AI interprets these requirements to build the frontend, rather than performing a direct one-to-one conversion of the old application.

Data Contracts: Input/output contracts for the UI define the placeholders where business logic plugs in; that logic may reside in the backend.

User Experience (UX) Definitions: These are details about the desired user experience, such as loading placeholders and animations, which help the AI understand the interactive and visual aspects of the application.

Acceptance Criteria: These are the specific, measurable goals for each page. The AI uses these criteria for testing and validation throughout the development process.

Technical Stack: The development framework, languages, and best practices provide the AI with the technical constraints and guidelines it must follow.
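To make these inputs concrete, here is a minimal TypeScript sketch of how one project's inputs might be bundled and sanity-checked before the AI starts work. The field names, the `validateInputs` helper, and the exact checks are assumptions for illustration; the rules it encodes come only from the list above (brownfield needs existing assets, greenfield needs a UI description, every project needs a PDD, and each page needs acceptance criteria).

```typescript
// Hypothetical input bundle for one project; field names are illustrative.
type ProjectKind = "brownfield" | "greenfield";

// Input/output contract for a UI placeholder backed by business logic elsewhere.
interface DataContract {
  name: string;                  // e.g. "GetCustomerProfile"
  input: Record<string, string>; // field name -> type name
  output: Record<string, string>;
}

interface ProjectInputs {
  kind: ProjectKind;
  existingAssets?: { screenshots: string[]; sourceFiles: string[] }; // brownfield
  recordings?: { videoPath: string; audioPath?: string }[];          // brownfield
  uiDescription?: string;                                            // greenfield
  pdd: string;                                  // Product Design Document, always required
  dataContracts: DataContract[];
  uxDefinitions: string[];                      // e.g. "skeleton loader while data loads"
  acceptanceCriteria: Record<string, string[]>; // page name -> measurable criteria
  techStack: { framework: string; componentLibrary?: string };
}

// Returns a list of problems; an empty list means the bundle is complete enough to start.
function validateInputs(p: ProjectInputs): string[] {
  const problems: string[] = [];
  if (p.pdd.trim() === "") {
    problems.push("a PDD is required for every project");
  }
  if (p.kind === "brownfield") {
    const a = p.existingAssets;
    if (!a || (a.screenshots.length === 0 && a.sourceFiles.length === 0)) {
      problems.push("brownfield projects need screenshots or existing page code");
    }
  } else if (!p.uiDescription) {
    problems.push("greenfield projects need a UI description");
  }
  for (const page of Object.keys(p.acceptanceCriteria)) {
    if (p.acceptanceCriteria[page].length === 0) {
      problems.push(`page "${page}" has no acceptance criteria`);
    }
  }
  return problems;
}
```

Running a check like this before analysis begins turns missing inputs into a short checklist for the product owner instead of gaps in the generated code.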

The How

"The How" describes the end-to-end automated process for building, testing, and deploying a frontend application using an AI system. The process begins by treating each application page as a single, self-contained unit for development. The AI system uses the inputs described in "The What" to analyze the project's requirements, generate all necessary artifacts, and manage the entire workflow. This system integrates seamlessly with existing development practices, ensuring a collaborative and traceable process from start to finish.

Step 1: Automated Analysis and Artifact Generation

The AI system ingests all the inputs from "The What", paying particular attention to video and audio, which capture the nuances of user interaction and design. It uses multimodal models to interpret this data and build a comprehensive understanding of the project's goals.

Analysis of Video Inputs: The system processes video recordings of users interacting with a legacy or brownfield application. Using computer vision techniques, the AI analyzes each frame to identify and track user actions, such as mouse clicks, scrolling, and form field entries. It detects the visual hierarchy, component layouts, and design patterns of the existing application pages. This provides a dynamic, real-world understanding of the user flow, revealing how different screens connect and what actions a user performs to complete a task.

Interpretation of Audio Inputs: The AI uses speech-to-text and natural language processing (NLP) to transcribe and analyze the audio accompanying the video. This audio often contains user descriptions of their actions ("I'm clicking on the submit button now"), their expectations ("This field should be pre-populated with my address"), and the rationale behind their choices. The AI correlates these spoken instructions with the visual events in the video to create a rich, contextual understanding of user intent and functional requirements.
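The correlation step can be pictured as a timestamp join: each action detected in the video picks up whatever speech overlaps it in time. The sketch below assumes the vision and speech-to-text stages have already produced timestamped events and transcript segments; the types, the `correlate` function, and the two-second default window are hypothetical choices, not a prescribed design.

```typescript
// A user action detected in the video (e.g., by a vision model), timestamped in seconds.
interface UiEvent {
  t: number;
  action: "click" | "scroll" | "type";
  target: string;
}

// A speech-to-text segment with start and end times in seconds.
interface TranscriptSegment {
  start: number;
  end: number;
  text: string;
}

interface AnnotatedEvent extends UiEvent {
  narration: string[]; // spoken context attached to this action
}

// Attaches every transcript segment that overlaps the window [t - windowSec, t + windowSec].
function correlate(
  events: UiEvent[],
  segments: TranscriptSegment[],
  windowSec = 2,
): AnnotatedEvent[] {
  return events.map((e) => ({
    ...e,
    narration: segments
      .filter((s) => s.start <= e.t + windowSec && s.end >= e.t - windowSec)
      .map((s) => s.text),
  }));
}
```

A fixed window is the simplest choice; a production system might instead weight segments by distance or let the multimodal model resolve ambiguous overlaps.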

Using this comprehensive understanding, the AI generates a series of key artifacts:

Source Code: The AI writes the complete frontend code for each page, adhering to the specified technical stack (e.g., Next.js, Blazor, Angular) and using the specified UI component libraries (e.g., shadcn/ui for React-based stacks such as Next.js). The code structure, visual layout, and interactive components are directly informed by the analysis of the user video.

Automated Test Code: The system automatically generates robust test suites, including unit tests to validate individual functions and components and integration tests to ensure different parts of the application work together correctly. These tests are based on the acceptance criteria provided, as well as the detailed user flow and interactions observed in the video and audio inputs.

Infrastructure as Code (IaC): The AI generates the necessary IaC scripts (e.g., using Terraform, AWS CloudFormation, or AWS CDK) to automatically provision the required cloud infrastructure in the target environment (e.g., AWS, running on Amazon ECS or a Kubernetes cluster). This IaC includes the configuration for the CI/CD pipeline itself.

Comprehensive Documentation: The system produces detailed and accurate documentation for all generated code and infrastructure. This documentation is crucial for bridging the knowledge gap for human developers who will later maintain the codebase. It includes not only code comments but also explanations of design choices and user flows derived directly from the video and audio analysis, ensuring the rationale for the AI's decisions is clear and transparent.
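As an illustration of what a generated unit test tied to acceptance criteria might look like, the sketch below pairs a small piece of hypothetical checkout-page logic with tests keyed to criterion IDs. The `check` helper is a minimal stand-in for a real test runner such as Jest or Vitest so the example runs on its own; the function names and the `AC-CHECKOUT-*` IDs are invented for illustration.

```typescript
// Hypothetical page logic the AI might generate for a checkout page.
interface LineItem {
  sku: string;
  unitPriceCents: number;
  quantity: number;
}

// Sums line items; negative quantities are clamped to zero.
function orderTotalCents(items: LineItem[]): number {
  return items.reduce((sum, i) => sum + i.unitPriceCents * Math.max(0, i.quantity), 0);
}

// Minimal stand-in for a test runner; each result records which criterion it covers.
interface TestResult {
  criterionId: string;
  name: string;
  passed: boolean;
}

function check(criterionId: string, name: string, fn: () => boolean): TestResult {
  let passed = false;
  try {
    passed = fn();
  } catch {
    passed = false; // a thrown error counts as a failure
  }
  return { criterionId, name, passed };
}

const results: TestResult[] = [
  check("AC-CHECKOUT-1", "total reflects quantity changes", () =>
    orderTotalCents([{ sku: "A", unitPriceCents: 250, quantity: 2 }]) === 500),
  check("AC-CHECKOUT-2", "negative quantities never reduce the total", () =>
    orderTotalCents([{ sku: "A", unitPriceCents: 250, quantity: -3 }]) === 0),
];
```

Keying each test to a criterion ID is what lets a failing test be traced back to the specific measurable goal it protects.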

Step 2: Automated CI/CD Pipeline

After generating all the artifacts, the AI initiates an automated Continuous Integration/Continuous Deployment (CI/CD) pipeline.

Infrastructure Provisioning: The pipeline first uses the generated IaC to automatically set up the entire development and testing environment in the cloud. This includes everything from virtual machines to networking configurations, ensuring a consistent and reproducible environment for every build.

Automated Testing: The pipeline automatically runs all the test code generated in the previous step. This includes:

Unit Tests: To verify that small, isolated pieces of code function as expected.

Integration Tests: To ensure that different components and services interact correctly.

User Acceptance Tests (UAT): The AI uses the acceptance criteria and the user interaction patterns observed in the video and audio as a benchmark to validate that the generated application pages meet the specific, measurable goals defined by the user.

Deployment: If all tests pass successfully, the pipeline automatically deploys the frontend application to the newly provisioned cloud environment. This ensures that a fully tested and functional version of the application is always available.
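The gating logic of this step, where each stage runs only if the previous one succeeded and deployment happens only after every test tier passes, can be sketched as follows. Modeling stages as synchronous callbacks that return success or failure is a simplification; real pipeline platforms run stages as separate jobs. `runPipeline` and the stage names are hypothetical.

```typescript
type StageName = "provision" | "unit" | "integration" | "uat" | "deploy";

interface Stage {
  name: StageName;
  run: () => boolean; // true = stage succeeded
}

interface PipelineReport {
  completed: StageName[];
  failedAt?: StageName;
  deployed: boolean;
}

// Runs stages in order and stops at the first failure, so "deploy"
// is only reached when provisioning and all test tiers have passed.
function runPipeline(stages: Stage[]): PipelineReport {
  const completed: StageName[] = [];
  for (const stage of stages) {
    if (!stage.run()) {
      return { completed, failedAt: stage.name, deployed: false };
    }
    completed.push(stage.name);
  }
  return { completed, deployed: completed.indexOf("deploy") !== -1 };
}
```

For example, if the integration stage fails, the report lists only `provision` and `unit` as completed and `deployed` stays `false`.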

Step 3: Collaborative Review and Iteration

The process isn't a "fire and forget" operation. It's a continuous feedback loop where human developers collaborate with the AI.

Review Points: At key stages, the generated artifacts—code, documentation, and the running application—are presented to human developers for review. This is a critical feedback mechanism.

Developer Feedback: Developers provide feedback on the AI's output. This could be anything from a simple UI change, like making a button green instead of red, to more complex feedback on code structure or performance.

AI Refinement: The AI system uses this feedback to refine its output, automatically making the requested changes and regenerating the code, tests, and documentation. This iterative process continues until the application meets all requirements and every acceptance criterion passes.
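The review loop can be sketched as a bounded iteration: a reviewer either approves the artifacts or returns comments, and a refinement step regenerates them. `review` and `refine` are hypothetical callbacks standing in for the human review and AI regeneration steps, and the `maxRounds` cap is an added assumption so the loop cannot run forever; when the cap is hit it returns `accepted: false` so the team can step in.

```typescript
interface Review {
  approved: boolean;
  comments: string[]; // e.g. "make the submit button green"
}

interface Artifacts {
  version: number;
  appliedFeedback: string[];
}

// Alternates review and refinement until approval or until maxRounds is reached.
function iterateUntilAccepted(
  initial: Artifacts,
  review: (a: Artifacts) => Review,
  refine: (a: Artifacts, comments: string[]) => Artifacts,
  maxRounds = 5,
): { artifacts: Artifacts; accepted: boolean; rounds: number } {
  let current = initial;
  for (let round = 1; round <= maxRounds; round++) {
    const r = review(current);
    if (r.approved) {
      return { artifacts: current, accepted: true, rounds: round };
    }
    current = refine(current, r.comments);
  }
  return { artifacts: current, accepted: false, rounds: maxRounds };
}
```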

This end-to-end process ensures that every change is traceable, and the final result is a high-quality, well-documented frontend that is fully integrated into the existing development workflow, ready for maintenance and further development.

The Output

The output of this AI-driven frontend development process is a comprehensive suite of artifacts, not limited to just the executable code. These outputs collectively represent a fully realized, tested, and documented frontend application, ready for integration with backend services, deployment, and ongoing maintenance.

Executable Frontend Code: This is the core deliverable: production-ready, functional frontend code (e.g., Next.js, Blazor, Angular) that directly implements the product requirements, user flows, and technical specifications. It incorporates the specified UI component libraries (e.g., shadcn/ui) and reflects the visual and interactive nuances captured from video and audio analysis.

Automated Test Suites: A complete set of automated tests, including:

Unit Tests: Validating the functionality of individual components and functions.

Integration Tests: Ensuring seamless interaction between different parts of the application.

User Acceptance Tests (UAT): Automated scripts that verify the application meets the defined acceptance criteria and replicates observed user interaction patterns.

Infrastructure as Code (IaC): Declarative scripts (e.g., Terraform, AWS CloudFormation, AWS CDK) that define and provision all necessary cloud infrastructure for the application's development, testing, and production environments. This includes compute resources, networking, databases, and the CI/CD pipeline configuration itself.

Comprehensive Documentation: Detailed and accurate documentation that bridges the gap between AI-generated code and human understanding. This encompasses:

Inline code comments.

Explanations of design choices and architectural patterns derived from AI analysis.

Diagrams and descriptions of user flows, derived directly from the video and audio inputs, that make the rationale for the AI's decisions clear.

Deployment guides and operational instructions.

Integration

The AI-driven frontend development system is designed for seamless integration with existing enterprise tooling and systems, ensuring a cohesive and automated software development lifecycle.

Infrastructure in AWS

The system leverages AWS for cloud infrastructure provisioning and management.

IaC Generation: The AI generates Infrastructure as Code (IaC) using AWS-native tools like AWS CloudFormation or AWS CDK, or cloud-agnostic tools like Terraform. These scripts define all necessary AWS resources.

Automated Provisioning: During the CI/CD pipeline's infrastructure provisioning step, these IaC scripts are executed to automatically deploy and configure AWS services. This includes:

Compute: Amazon EC2 instances, AWS Lambda functions, Amazon ECS/EKS clusters for containerized applications.

Storage: Amazon S3 for static assets, Amazon RDS for databases.

Networking: Amazon VPC, Elastic Load Balancing (Application and Network Load Balancers), Amazon Route 53 for DNS.

Content Delivery: Amazon CloudFront for global content delivery and caching.

Environment Consistency: IaC ensures that development, testing, and production environments are consistently provisioned and configured, reducing discrepancies and "it works on my machine" issues.
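IaC tools typically achieve this consistency by deriving every environment from one shared definition plus a small, explicit set of overrides, so any difference between environments is deliberate and visible. The plain TypeScript sketch below illustrates that idea without depending on CDK or Terraform; the setting names and values are illustrative assumptions.

```typescript
type EnvName = "dev" | "test" | "prod";

// Settings an IaC definition might expose per environment (illustrative only).
interface EnvConfig {
  containerCpu: number; // e.g., ECS task CPU units
  minTasks: number;
  maxTasks: number;
  cdnEnabled: boolean;  // CloudFront in front of the application
  domain: string;       // Route 53 record name
}

// One shared baseline for every environment.
const base: EnvConfig = {
  containerCpu: 512,
  minTasks: 1,
  maxTasks: 2,
  cdnEnabled: true,
  domain: "dev.example.com",
};

// The only places environments are allowed to differ.
const overrides: Record<EnvName, Partial<EnvConfig>> = {
  dev: {},
  test: { domain: "test.example.com" },
  prod: { minTasks: 2, maxTasks: 6, domain: "www.example.com" },
};

function resolve(env: EnvName): EnvConfig {
  return { ...base, ...overrides[env] } as EnvConfig;
}

// Lists the settings that differ between two resolved environments.
function diffKeys(a: EnvConfig, b: EnvConfig): (keyof EnvConfig)[] {
  const keys = Object.keys(a) as (keyof EnvConfig)[];
  return keys.filter((k) => a[k] !== b[k]);
}
```

Comparing `diffKeys(resolve("dev"), resolve("prod"))` against the expected override keys is one cheap way to catch accidental drift in a pipeline check.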

CI/CD Pipelines

The AI system fully automates the CI/CD process, integrating with robust pipeline platforms.

Pipeline Definition: The AI generates the CI/CD pipeline configuration itself as part of the IaC, defining stages for building, testing, and deploying.

Integration Platforms: This integrates with platforms such as:

AWS CodePipeline / CodeBuild / CodeDeploy: For a fully AWS-native CI/CD workflow.

GitLab CI/CD: If GitLab is used as the code repository, its integrated CI/CD capabilities can be leveraged.

GitHub Actions: For repositories hosted on GitHub, enabling automated workflows directly within the repository.

Jenkins: For on-premise or self-hosted CI/CD environments.

Automated Stages: The pipeline automates:

Source Code Checkout: Retrieving the AI-generated code from the repository.

Build: Compiling and packaging the frontend application.

Automated Testing: Running unit, integration, and UAT tests generated by the AI.

Deployment: Deploying the tested application to the designated AWS environment.
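Each platform looks for its pipeline definition in a conventional place: GitHub Actions reads workflow YAML files from `.github/workflows/`, GitLab CI/CD reads `.gitlab-ci.yml` at the repository root, Jenkins pipelines default to a `Jenkinsfile`, and AWS CodeBuild defaults to `buildspec.yml` (CodePipeline itself is usually defined in the IaC rather than as a repository file). The sketch below shows how a generator might pick the target file and keep the stage order safe; `planPipeline`, `isSafeOrder`, and the workflow file name are hypothetical.

```typescript
type Platform = "github-actions" | "gitlab-ci" | "jenkins" | "aws-codebuild";

// Conventional default locations for each platform's pipeline definition.
const CONFIG_PATH: Record<Platform, string> = {
  "github-actions": ".github/workflows/frontend.yml", // any .yml/.yaml in this folder works; name is illustrative
  "gitlab-ci": ".gitlab-ci.yml",
  "jenkins": "Jenkinsfile",
  "aws-codebuild": "buildspec.yml",
};

// Stage order from the list above.
const STAGES = ["checkout", "build", "test", "deploy"];

interface PipelinePlan {
  platform: Platform;
  configPath: string;
  stages: string[];
}

function planPipeline(platform: Platform): PipelinePlan {
  return { platform, configPath: CONFIG_PATH[platform], stages: STAGES.slice() };
}

// Deployment must never be scheduled before (or without) the test stage.
function isSafeOrder(stages: string[]): boolean {
  const t = stages.indexOf("test");
  const d = stages.indexOf("deploy");
  return t !== -1 && d !== -1 && t < d;
}
```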

Code Repositories

All generated code and documentation are managed within version control systems.

Version Control Systems (VCS): The AI pushes its generated artifacts (source code, test code, IaC, documentation) to popular VCS platforms:

GitHub / GitLab / Bitbucket: For cloud-hosted or self-hosted Git repositories.

AWS CodeCommit: For an AWS-native Git repository solution.

Branching Strategy: The AI adheres to a defined branching strategy (e.g., GitFlow, Trunk-Based Development), creating feature branches for new development and pull/merge requests for review.

Traceability: Every AI-generated change is committed with clear commit messages, providing a traceable history of development.
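A sketch of deterministic branch names and commit messages that tie each AI-generated change back to its ticket follows. The `feature/<ticket>-<slug>` pattern, the Conventional Commits-style subject line, and the `Generated-by` trailer are assumed conventions for illustration, not something the process above prescribes.

```typescript
// Lowercases text and collapses anything non-alphanumeric into single dashes.
function slugify(s: string): string {
  return s
    .toLowerCase()
    .replace(/[^a-z0-9]+/g, "-")
    .replace(/^-+|-+$/g, "")
    .slice(0, 40)
    .replace(/-+$/, ""); // truncation may leave a trailing dash
}

// Hypothetical branch convention: feature/<ticket>-<slug>.
function featureBranch(ticketId: string, title: string): string {
  return `feature/${ticketId}-${slugify(title)}`;
}

// Subject line, blank line, then git-style trailers linking back to the ticket.
function commitMessage(ticketId: string, page: string, summary: string): string {
  return [
    `feat(${slugify(page)}): ${summary}`,
    "",
    `Refs: ${ticketId}`,
    "Generated-by: ai-frontend-agent", // hypothetical trailer marking AI-authored commits
  ].join("\n");
}
```

For example, `featureBranch("FE-123", "Checkout Page: Address Form")` produces `feature/FE-123-checkout-page-address-form`.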

Slack

Slack serves as a central hub for real-time communication and notifications.

CI/CD Notifications: Automated notifications are sent to designated Slack channels for:

Build status (success/failure).

Deployment status (success/failure, environment deployed to).

Test results summaries.

Review and Feedback: When the AI presents artifacts for human review (as per Step 3), a notification is sent to Slack, including links to the generated code, documentation, and the running application.

Developer Feedback Loop: Developers can provide direct feedback to the AI via specific Slack commands or by interacting with linked project management tickets, which the AI then ingests for refinement.
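Slack incoming webhooks accept a JSON body with a `text` field, and Slack's mrkdwn formatting renders `<url|label>` as a link and `*text*` as bold. The sketch below turns pipeline events into such a payload; the event shape and `slackPayload` are hypothetical, and actually sending the message (a POST of the JSON to the channel's webhook URL) is left out.

```typescript
type Status = "success" | "failure";

interface PipelineEvent {
  kind: "build" | "deploy" | "tests";
  status: Status;
  environment?: string;                   // set for deployment events
  summary?: string;                       // e.g. "42 passed, 0 failed"
  links?: { label: string; url: string }[]; // code, docs, running app
}

// Builds the minimal JSON body Slack incoming webhooks accept: { text }.
function slackPayload(e: PipelineEvent): { text: string } {
  const icon = e.status === "success" ? ":white_check_mark:" : ":x:";
  const parts = [`${icon} *${e.kind}* ${e.status}`];
  if (e.environment) parts.push(`(env: ${e.environment})`);
  if (e.summary) parts.push(`- ${e.summary}`);
  // Slack mrkdwn link syntax: <url|label>
  const links = (e.links || []).map((l) => `<${l.url}|${l.label}>`).join(", ");
  return { text: parts.join(" ") + (links ? "\n" + links : "") };
}
```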

Ticket and Tooling for Sprints

Project management and sprint tracking are integrated to ensure alignment with agile methodologies.

Project Management Tools: The system integrates with tools like:

Jira: For creating, updating, and resolving tickets.

Asana / Trello: For task management and sprint boards.

Automated Ticket Creation: The AI can automatically create tickets for:

Review Points: When new features or pages are ready for human review.

Bugs/Issues: If automated tests identify failures or discrepancies.

Feature Progress: Updating the status of tasks as code is generated, tested, and deployed.

Artifact Linking: Tickets are automatically linked to the relevant AI-generated code, documentation, and deployed application instances, providing a single source of truth for each task.

Sprint Tracking: Product owners and development teams can track the progress of AI-generated features within their existing sprint planning and execution workflows.
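A sketch of turning automated test failures into bug tickets, with one ticket per failing acceptance criterion so a single broken requirement does not flood the board, follows. The body shape follows Jira's REST API v2 create-issue endpoint (`POST /rest/api/2/issue`); version 3 of the API expects `description` in Atlassian Document Format rather than a plain string. The project key, `TestFailure` type, and helper names are hypothetical.

```typescript
interface TestFailure {
  testName: string;
  criterionId: string;
  page: string;
  message: string;
}

// Request body for Jira REST API v2 issue creation (plain-string description).
interface JiraIssueBody {
  fields: {
    project: { key: string };
    summary: string;
    description: string;
    issuetype: { name: string };
  };
}

function bugTicketFor(f: TestFailure, projectKey: string, artifactUrl: string): JiraIssueBody {
  return {
    fields: {
      project: { key: projectKey },
      summary: `[${f.page}] ${f.testName} failed (${f.criterionId})`,
      description: [
        `Automated test failure: ${f.message}`,
        `Acceptance criterion: ${f.criterionId}`,
        `Generated artifacts: ${artifactUrl}`, // links the ticket back to code and docs
      ].join("\n"),
      issuetype: { name: "Bug" },
    },
  };
}

// Keeps only the first failure per acceptance criterion.
function bugTickets(failures: TestFailure[], projectKey: string, artifactUrl: string): JiraIssueBody[] {
  const seen: { [criterionId: string]: boolean } = {};
  const out: JiraIssueBody[] = [];
  for (const f of failures) {
    if (seen[f.criterionId]) continue;
    seen[f.criterionId] = true;
    out.push(bugTicketFor(f, projectKey, artifactUrl));
  }
  return out;
}
```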

Capture of Artifacts from Product Owners

The system provides structured mechanisms for product owners to input requirements.

Product Design Document (PDD): Product owners input detailed product requirements and user flows into a structured PDD, which can reside in platforms like:

Confluence: For collaborative documentation.

Google Docs / Microsoft SharePoint: For shared document management.

Dedicated Requirement Management System: A custom portal or existing enterprise tool designed for requirements capture.

UX Definitions & Acceptance Criteria: These are captured alongside the PDD or within the project management tool (e.g., as detailed descriptions in Jira tickets), allowing the AI to ingest them directly.

Existing Assets (Brownfield): Screenshots and existing page code are ingested directly by the AI, potentially uploaded via a secure portal or retrieved from existing code repositories.

Video and Audio Inputs: A dedicated capture mechanism allows product owners or UX researchers to upload video and audio recordings of user interactions, which the AI then processes for detailed behavioral and contextual understanding.
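When acceptance criteria live in ticket descriptions, the AI needs a predictable way to pull them out. The sketch below assumes a hypothetical team convention: an "Acceptance Criteria" line followed by bullets starting with `-` or `*`. Real tickets (Jira wiki markup, Atlassian Document Format, Markdown) would need a format-specific parser; `extractAcceptanceCriteria` is an illustrative name only.

```typescript
// Assumes a hypothetical convention: an "Acceptance Criteria" heading line
// followed by bullet lines that start with "-" or "*".
function extractAcceptanceCriteria(description: string): string[] {
  const criteria: string[] = [];
  let inSection = false;
  for (const raw of description.split(/\r?\n/)) {
    const line = raw.trim();
    if (/^acceptance criteria:?$/i.test(line)) {
      inSection = true;
      continue;
    }
    if (!inSection) continue;
    const m = /^[-*]\s+(.*)$/.exec(line);
    if (m) {
      criteria.push(m[1].trim());
      continue;
    }
    if (line === "") continue; // tolerate blank lines inside the list
    break;                     // any other text ends the section
  }
  return criteria;
}
```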

This integrated ecosystem ensures that the AI-driven frontend development process is not an isolated component but a deeply embedded and collaborative part of the overall software delivery pipeline.
